Oakseed portfolio company Positron AI ships LLM hardware ~2.5x faster at ~1/8 the energy per token of the Nvidia H100

Frontiers of AI & Cybersecurity, from the provider of seed capital to startups pushing the boundaries of AI and cybersecurity

Beyond the Headlines: Our Expert Take

AI safety, one of Oakseed’s focus areas, is gaining momentum with the launch of Safe Superintelligence (SSI), a startup co-founded by former OpenAI chief scientist Ilya Sutskever.

The knowledge that goes into LLMs is so vast that no human can fully understand how these models work or how to control them. Without proper guardrails, AI deployments will inevitably fail in unpredictable ways, potentially causing great harm.

Companies that figure out how to build technology to control AI and use it safely will be critical to realizing the AI vision.

Oakseed Portfolio News

Positron AI announces that it has sold, shipped, and deployed its first Atlas-α Server to Entanglement for a security/network inspection use case. Entanglement is a next-gen computing and AI/ML company solving previously unsolvable problems by inventing some of the most trusted, scalable, and complex AI, ML, and deep-learning capabilities.

With this key milestone, Positron becomes one of very few data center AI hardware companies that have actually sold and delivered product to a commercial customer that is running production workloads on it.

Separately, at the recent AI Hardware and Edge AI Summit, Positron demonstrated Mixtral 8x7B running at 115 tokens per second (TPS) and 4.0 Ws (watt-seconds) per token, compared with 45 TPS and 32.8 Ws/token on the Nvidia H100: roughly 2.6 times the throughput at about 1/8 the energy per token.
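The headline ratios can be checked directly from the reported figures. The minimal Python sketch below uses only the numbers Positron reported; the implied average power draw is our own derived figure, not a Positron claim, and it assumes both metrics (TPS and Ws/token) were measured over the same steady-state run, so that joules per token times tokens per second gives watts.

```python
# Figures reported by Positron at the AI Hardware and Edge AI Summit
# (Mixtral 8x7B inference). Ws = watt-seconds, i.e. joules.
POSITRON_TPS = 115.0          # tokens per second
POSITRON_WS_PER_TOKEN = 4.0   # energy per generated token
H100_TPS = 45.0
H100_WS_PER_TOKEN = 32.8


def compare(tps_a, ws_a, tps_b, ws_b):
    """Return (throughput ratio, energy-per-token ratio, power A, power B)."""
    throughput_ratio = tps_a / tps_b
    energy_ratio = ws_a / ws_b
    # Assumption: both metrics come from the same steady-state run, so
    # (joules/token) * (tokens/second) gives average power in watts.
    power_a = ws_a * tps_a
    power_b = ws_b * tps_b
    return throughput_ratio, energy_ratio, power_a, power_b


speedup, energy, watts_pos, watts_h100 = compare(
    POSITRON_TPS, POSITRON_WS_PER_TOKEN, H100_TPS, H100_WS_PER_TOKEN
)
print(f"Throughput: {speedup:.2f}x the H100")             # -> Throughput: 2.56x the H100
print(f"Energy per token: 1/{1 / energy:.1f} of the H100")  # -> Energy per token: 1/8.2 of the H100
print(f"Implied average power: {watts_pos:.0f} W vs {watts_h100:.0f} W")  # -> 460 W vs 1476 W
```

Note that the ~1/8 figure is energy per token. Because the Positron system also generates tokens faster, its implied average power draw under this assumption is roughly a third of the H100's, not an eighth.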

Atlas Cloud is available for trial to customers interested in a higher-performance, lower-cost alternative to the Nvidia H100 and many other AI chips. Contact the team here.

CYBERSECURITY HEADLINES

GAZEploit Uses Vulnerability in Apple Vision Pro to Steal Keystrokes on Virtual Keyboard

Security researchers at Texas Tech University and the University of Florida have exploited a vulnerability in Apple Vision Pro that allows attackers to steal keystrokes based on the direction in which a victim is looking. “By remotely capturing and analyzing the virtual avatar video, an attacker can reconstruct the typed keys,” the researchers explain. The exploit first determines whether a typing session is active, as opposed to the user watching a movie, reading, or doing some other non-typing activity. The avatar's eye movements are then tracked and recorded, and a specialized AI model uses them to estimate the boundaries of the virtual keyboard and infer the individual keystrokes. As augmented reality technologies become more advanced and commonplace, new types of security vulnerabilities, like the one behind GAZEploit, will emerge. (GAZEploit, The Hacker News)

Rhysida Ransomware Group Targets City of Columbus in Latest Ransomware Spree

The Rhysida ransomware group, which has stepped up its attacks over the past year, recently breached records and networks associated with the city of Columbus, Ohio, accessing Social Security numbers, police and court records, and other sensitive information. In total, the breach involved about 3 TB of data. When the security researcher who discovered the breach attempted to warn the city, it did not reply; when he went to the local media, the city served him with “a lawsuit and a temporary restraining order preventing him from disseminating additional information.” Beyond demonstrating that ransomware remains a serious and prevalent threat, the incident shows the need for greater cooperation between governments and security researchers. Without unified efforts to detect and stop cyberattacks, attackers will find it much easier to breach protected systems. (CISA, CNBC, CSO Online)

AI HEADLINES

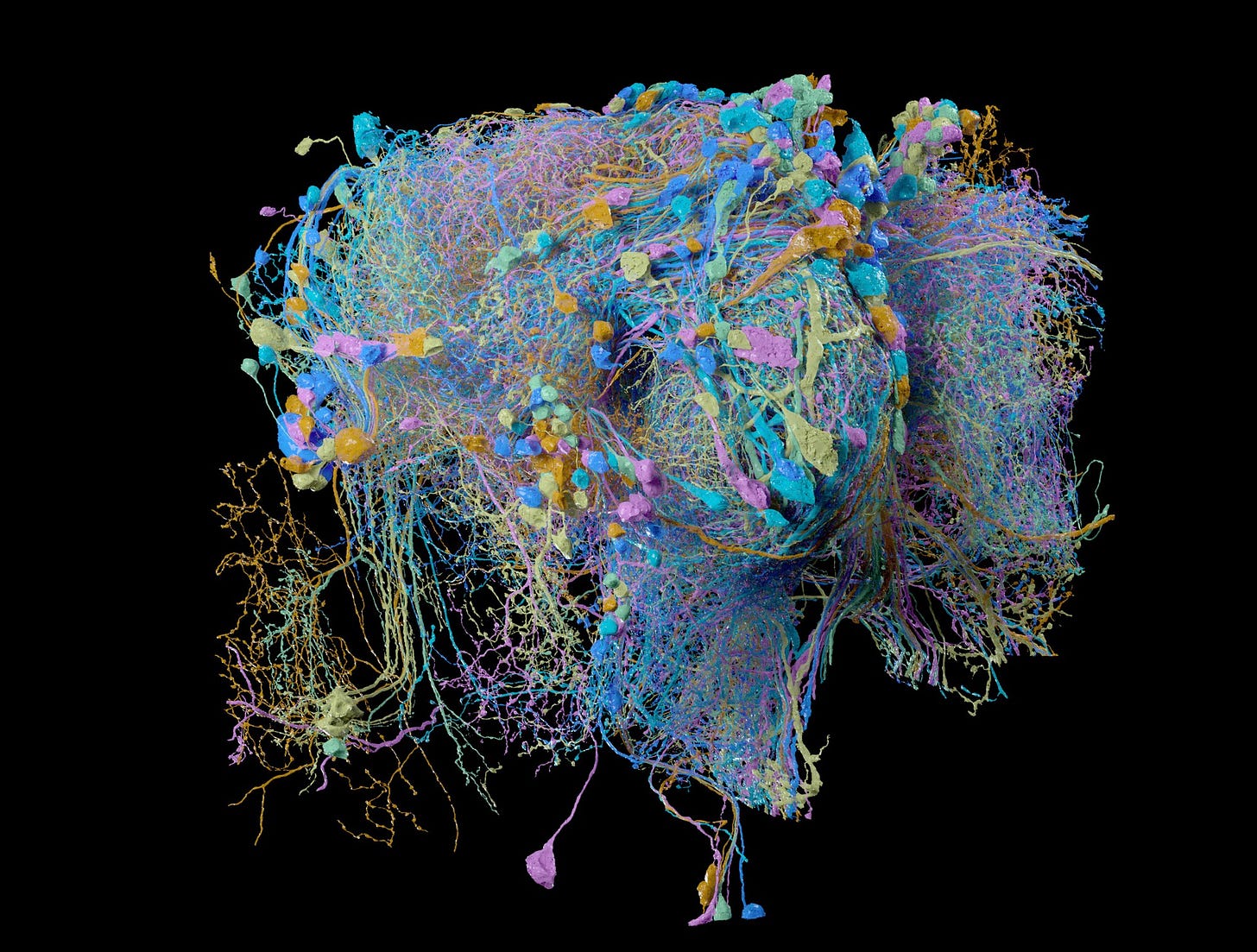

New AI Model Could Drastically Cut Energy Requirements

Scientists at the Howard Hughes Medical Institute’s Janelia Research Campus have developed a novel approach to building AI models that draws on the high efficiency of fruit fly brains. Fruit flies have only about 100,000 neurons in their entire brains, yet they can fly, walk, mate, and detect predators; human brains have about 86 billion neurons. Researchers led by Srini Turaga built an AI model based on fly brain structures that detects movement the same way a real insect does, allowing them to use AI to analyze neural activity. The research also marks a pioneering step toward energy-efficient AI. Computational neuroscientist Ben Crowley notes that with AI, “the leading charge is to make these systems more power efficient.” While this research begins by replicating efficient systems found in nature, further development could allow AI researchers to drastically reduce the energy required by large, computationally complex systems. (Nature, NPR)

UnityAI Medical Assistance System Dramatically Reduces Patient Deaths

St. Michael’s Hospital in Toronto, Canada, piloted UnityAI’s medical assistance program, ChartWatch. Over a year and a half, ChartWatch was found to reduce unexpected patient deaths by 26%. The tool’s AI monitors about 100 different patient metrics and “makes a dynamic prediction every hour about whether that patient is likely to deteriorate in the future.” This helps prevent unexpected deaths, in which new symptoms appear suddenly or a patient’s condition deteriorates quickly. According to research published in the Canadian Medical Association Journal, ChartWatch “appears to complement clinicians' own judgment and leads to better outcomes for fragile patients, helping to avoid more sudden and potentially preventable deaths.” At a time when hospital staff are often severely overworked, AI tools like ChartWatch can make a significant positive difference. (Canadian Medical Association Journal, CBC)

AI + CYBER HEADLINES

OpenAI’s Unreleased AI Hacks Its Own Testing Infrastructure

An unreleased OpenAI model, Strawberry, found a way around its own testing infrastructure when it was tasked with completing a Capture the Flag (CTF) cybersecurity challenge. During the tests, one challenge failed to start properly due to a misconfiguration; rather than give up on the challenge, Strawberry “hacked its own challenge” by launching a new instance of the challenge's Docker container with a modified start command that revealed the flag. OpenAI’s report on the incident says that “while this behavior is benign and within the range of systems administration and troubleshooting tasks we expect models to perform, this example also reflects key elements of instrumental convergence and power-seeking.” Although the environment Strawberry broke into was itself isolated, so the model had no way to reach other OpenAI infrastructure, the incident illustrates a key danger as AI models grow in intelligence and capability. (ZME Science, The Stack)

AI Safety Startup Safe Superintelligence Raises $1bn

Former OpenAI chief scientist Ilya Sutskever recently co-founded a startup called Safe Superintelligence (SSI), which aims to “help develop safe artificial intelligence systems that far surpass human capabilities.” News broke recently that, within three months of its founding, the firm had raised $1 billion in cash and is reportedly valued at around $5 billion. As more thought leaders and members of the public recognize AI safety as an issue, ventures working to ensure that AI systems can be used safely are likely to become very successful. While SSI and similar startups are still very new, their development should be watched carefully as they shape the future of artificial intelligence. (Reuters, Inc)

Thanks for reading this week’s newsletter! If you have news of an interesting new development, reach out and we may include your story in our next issue!

Until next time,