Massive Hack of US Telecommunications Infrastructure, Groundbreaking AI Training Techniques Discovered, and First Instance of AI Disclosure of Zero-Day Vulnerability

Frontiers of AI & Cybersecurity from the provider of seed capital to startups pushing boundaries of AI & Cybersecurity

Happy Holidays

Oakseed wishes everyone happy holidays and a wonderful 2025 ahead!

Oakseed News

We successfully organized an AI Demo Day showcasing some of our portfolio companies (Positron AI, Featherless AI, Fleak, Daxa, Choir AI and Celeritas AI), followed by a discussion with a few of their customers (including AstraZeneca and SAP). Close to 100 later-stage VCs and LPs attended and got a view of where AI is headed, from infrastructure to applications and cybersecurity. Let us know if you would like to receive a recording.

Beyond the Headlines: Our Expert Take

As AI grows in influence and reach, it becomes more important to manage the safety of the users and systems that depend on it. To that end, this issue's AI Headlines focus on two groundbreaking new techniques for managing the safety of AI models: discovering and removing bias, and teaching models to forget sensitive or dangerous training data. As techniques like these are discovered and refined, AI developers can use them to build systems that safeguard the reliability and correctness of models while minimizing risk to users.

CYBERSECURITY HEADLINES

Salt Typhoon Hacker Group Infiltrates United States Telecommunications Infrastructure

This past week saw what “likely represents the largest telecommunications hack in [the United States'] history” when a threat group dubbed Salt Typhoon by Microsoft Threat Intelligence was found to have infiltrated at least eight telecommunications and infrastructure firms, stealing phone records and metadata of an unknown number of users. The attackers also targeted “high-value intelligence targets related to U.S. politics and government,” including those associated with the presidential campaigns of Donald Trump and Kamala Harris. Reportedly, these high-value targets were also subject to phone and communications wiretapping. The FBI and CISA have encouraged individuals to use end-to-end encrypted messaging apps such as WhatsApp or Signal. (Reuters, NBC, The Register)

Millions of Windows Users Vulnerable to New Zero-Day Memory Security Vulnerability

A new, actively exploited zero-day vulnerability was recently discovered, and Microsoft has released a patch in this month’s Patch Tuesday fixes. All Windows users are strongly encouraged to apply the latest updates as soon as possible. The vulnerability is tracked as CVE-2024-49138 and has been given a CVSS score of 7.8, which denotes a severity of ‘High.’ It was found in the Windows Common Log File System, or CLFS, and stems from a buffer overflow; it can reportedly “open up Windows devices to full system compromise” and is under active exploitation. Details are being withheld until users have had time to apply the patches. (Forbes, Dark Reading, Security Week)
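For readers wondering how a score of 7.8 translates to ‘High,’ here is a minimal sketch of the qualitative severity bands used by CVSS v3.x (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0). The function name `cvss_v3_severity` is our own illustration and not part of any official tool.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating.

    Hypothetical helper for illustration only. CVSS scores are published
    with one decimal place, so the input is rounded to one decimal first.
    """
    score = round(score, 1)
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


# The score reported for CVE-2024-49138 falls in the 7.0 to 8.9 band.
print(cvss_v3_severity(7.8))  # High
```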

AI HEADLINES

MIT Researchers Develop Innovative Technique for Debiasing AI Models

Five researchers at MIT have created an innovative method for detecting and removing bias in AI models, which maintains overall accuracy of the model, improves accuracy for groups underrepresented in the data, and requires far less dataset modification than existing methods. The method works by “[isolating] and [removing] specific training examples that drive the model’s failures on minority groups, […] and does not require training group annotations or additional hyperparameter tuning.” The researchers are continuing to make their algorithm stronger and easier to use, and hope that in the future it can be used to “build models that are more fair and reliable” in real-world environments. (MIT, arXiv)

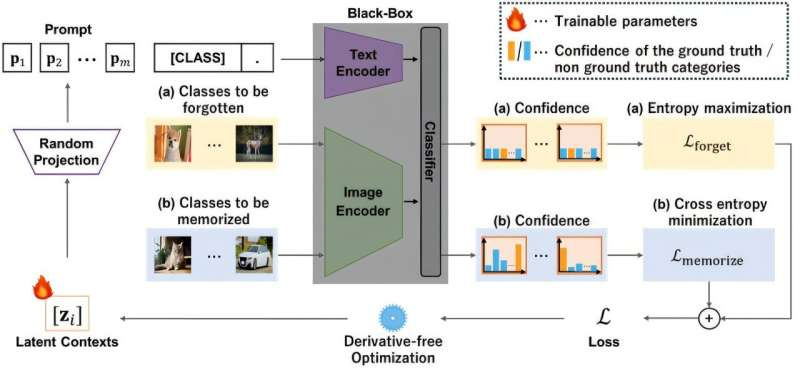
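The data-removal idea in the MIT item above can be illustrated with a toy sketch. It assumes each training example already has a "harm score" estimating how much it contributes to failures on an underrepresented group; how the researchers actually compute such attributions is not covered here, and the function name, scores, and data below are hypothetical.

```python
def drop_most_harmful(examples, harm_scores, k):
    """Return the training set with the k highest-scoring examples removed.

    Illustration only: `harm_scores` stands in for whatever attribution
    method identifies the examples driving minority-group failures.
    """
    if len(examples) != len(harm_scores):
        raise ValueError("need exactly one score per example")
    if k < 0:
        raise ValueError("k must be non-negative")
    # Rank example indices from most to least harmful.
    ranked = sorted(range(len(examples)), key=lambda i: harm_scores[i], reverse=True)
    to_drop = set(ranked[:k])
    # Keep the original order of the surviving examples.
    return [ex for i, ex in enumerate(examples) if i not in to_drop]


train = ["a", "b", "c", "d", "e"]      # made-up example IDs
scores = [0.1, 0.9, -0.2, 0.7, 0.0]    # made-up harm scores
print(drop_most_harmful(train, scores, 2))  # ['a', 'c', 'e']
```

Only the targeted examples are removed and the rest of the dataset is left untouched, which is consistent with the claim that the approach needs far less dataset modification than alternatives; the model would then be retrained on the reduced set.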

Tokyo University of Science Researchers Develop Method to Make AI Models Forget Training Data

Researchers at the Tokyo University of Science, led by Associate Professor Go Irie, have developed a technique dubbed ‘black-box forgetting,’ which forces a model to forget some of its capabilities and training data by iteratively refining the prompts presented to it. While other techniques can also produce these results, Professor Irie’s research is notable because it does not require access to the internals of the model. Applications of this research are numerous, from reducing the computation and storage requirements of specialized models to preventing models from generating objectionable content. It can also provide significant privacy benefits, especially as models are trained on potentially sensitive data. (TechXplore, Tokyo University of Science, AI News)

AI + CYBER HEADLINES

Sublime Security Raises $60 Million in Series B Funding to Improve Email Security with AI

Sublime Security, a startup that sells a “programmable email security platform for Microsoft 365 and Google Workspace,” has just secured $60 million in Series B funding from IVP, Citi Ventures, Index Ventures, and others. Its product combines “AI-driven detection with a programmable rules engine to protect against phishing, malware and targeted Business Email Compromise,” and already counts major clients such as Spotify and Reddit. As of December 2024, the company’s total funding is about $93.8 million. As artificial intelligence has become a tool for threat actors to craft increasingly stealthy cyberattacks, it has become just as important for defenders to leverage it for detecting and preventing threats. (Security Week, PR Newswire, Sublime Security)

Project Naptime LLM Successfully Exploits Buffer Overflow Vulnerability

Project Naptime, a Google Project Zero effort to build a large language model that can find and exploit security vulnerabilities, has produced a model that successfully identifies and exploits buffer overflow vulnerabilities in applications. The model has not only worked on test code; it also recently found a real zero-day vulnerability in SQLite, which was disclosed to and fixed by the SQLite development team. According to the Project Naptime researchers, “substantial progress is still needed before these tools can have a meaningful impact on the daily work of security researchers”; however, this breakthrough marks significant progress toward AI-powered offensive security research. (Project Zero, Forbes)

Thanks for reading this week’s newsletter! If you have news of an interesting novel development, reach out and we may include your story in our next post!

Until next time,