Biggest data breach ever, LLMs truly understand what they say, and AI can read minds

Frontiers of AI & Cybersecurity, from a provider of seed capital to startups pushing the boundaries of AI & Cybersecurity

“Anything that could give rise to smarter-than-human intelligence—in the form of Artificial Intelligence, brain-computer interfaces, or neuroscience-based human intelligence enhancement – wins hands down beyond contest as doing the most to change the world. Nothing else is even in the same league.”

– Eliezer Yudkowsky

Welcome to the first newsletter focused solely on the convergence of AI and Cybersecurity! Artificial Intelligence is a massively influential new technology that’s impacting business and society in unprecedented and unforeseen ways. As malicious attackers begin to use AI, Cybersecurity will be our biggest tool to combat hacks, fraud, disinformation, and deepfakes.

Look out for our emails every other week to stay updated on the most revolutionary advancements of our age!

Our first post will discuss some of the most exciting stories and developments from 2023 and early 2024.

CYBERSECURITY

Biggest Data Breach of All Time Reveals 26 Billion User Records:

26 billion records, many of them compiled from earlier breaches, have been leaked in the Mother of All Breaches, likely to be crowned the largest data breach of all time. Usernames, emails, passwords, and more sit in a 12-terabyte database that fulfills the biggest dreams of malicious hackers around the world. Users everywhere need to be alert for identity theft and phishing emails. Security experts urge people to check whether their information has been leaked on Have I Been Pwned, enable MFA on all accounts, and reset their passwords. (Techopedia, IT Security Guru)
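For readers curious how a password can be checked against leaked data without sending the password anywhere, Have I Been Pwned’s Pwned Passwords service uses a k-anonymity “range” lookup: only the first five hex characters of the password’s SHA-1 hash are sent, and the service returns every known hash suffix sharing that prefix as “SUFFIX:COUNT” lines, so the match happens locally. The short Python sketch below assumes that public endpoint (api.pwnedpasswords.com/range/) and response format; the function names are hypothetical, and it checks passwords only, not whether an email address appears in a breach.

```python
import hashlib
import urllib.request

# Assumed public Pwned Passwords k-anonymity endpoint; no API key is needed for range queries.
RANGE_URL = "https://api.pwnedpasswords.com/range/"


def sha1_prefix_suffix(password: str) -> tuple[str, str]:
    """Split the uppercase SHA-1 hex digest into a 5-char prefix and a 35-char suffix."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def count_in_range_response(body: str, suffix: str) -> int:
    """Scan 'SUFFIX:COUNT' lines and return the breach count for our suffix, or 0 if absent."""
    for line in body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0


def pwned_count(password: str) -> int:
    """Send only the hash prefix over the network; the full hash is compared locally."""
    prefix, suffix = sha1_prefix_suffix(password)
    request = urllib.request.Request(
        RANGE_URL + prefix, headers={"User-Agent": "pwned-check-sketch"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        body = response.read().decode("utf-8")
    return count_in_range_response(body, suffix)
```

Calling `pwned_count("some password")` returns how many times that password appears in the service’s breach corpus; any nonzero result is a strong signal to change it everywhere it is used. Splitting the network call from the parsing step keeps the privacy-relevant logic (what leaves the machine) easy to inspect.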

Dozens of Zero-Day Vulnerabilities in Tesla and Other Electric Car Systems Discovered:

A security contest organized by Trend Micro uncovered more than 50 zero-day vulnerabilities in electric vehicle operating systems, hardware, and components, including several that led to root access on Tesla hardware. Tesla and the other vendors of the affected systems have 90 days to patch the vulnerabilities before they are publicly disclosed. Expert Renaud Feil notes that “the attack surface of the car is growing [...], because manufacturers are adding wireless connectivities, and applications that allow you to access the car remotely over the Internet.” Electric vehicle owners should keep their cars fully up to date, especially as these critical patches roll out over the next few months. (Dark Reading, Bleeping Computer)

AI

Theory Suggests that LLMs Demonstrate Creativity and Language Understanding:

New research indicates that the largest LLMs may be able to develop creativity, originality, and genuine understanding of language, challenging the idea that they are nothing more than “stochastic parrots” that can only generate text by recombining information from their training data. The theory suggests that the largest models can learn and combine skills like metaphor, statistical syllogism, or self-serving bias in combinations that are “statistically impossible” to have appeared in the training data. One researcher believes that “LLMs are able to put building blocks together that have never been put together [...] this is the essence of creativity.” (Quanta Magazine)

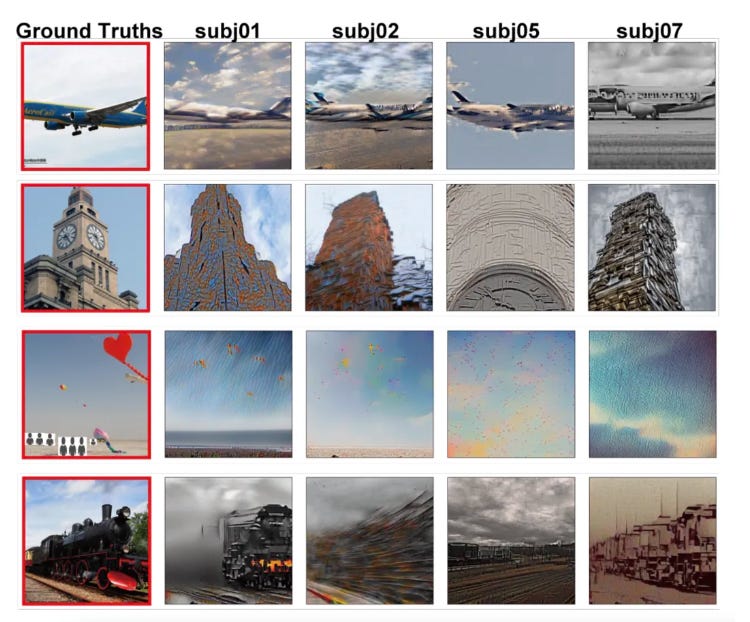

AI Can Read Human Minds to Copy Images from Thoughts:

Researchers have connected image-generating artificial intelligence to recordings of human brain activity and are now demonstrating its ability to recreate images from that activity. The images the AI reconstructs from each subject’s brain data closely resemble the originals, though the technology needs a significant amount of training data from each subject to reach this accuracy. Experts debate whether using this technology for surveillance or interrogations is ethical, and some are concerned about what will happen when for-profit companies improve and commercialize it. Society could see anything from revolutionary neuroscience treatments to science-fiction-style mind-reading within the next two decades. (Smithsonian, NBC)

CYBERSECURITY AND AI

New AI Attack Can Steal Keystrokes by Listening to Typing:

Researchers have combined AI with an acoustic side-channel attack to predict and steal keystrokes simply by listening to the sound of typing. A deep learning model trained on a MacBook Pro keyboard identified the keys pressed with 95% accuracy from typing recorded on a nearby phone, and with 93% accuracy from typing captured over a Zoom call. This means anyone who types passwords or other sensitive information within range of a microphone could have those keystrokes captured and the corresponding accounts compromised. (Bleeping Computer)

Deepfaked Biden Asks Democratic Voters to Skip Primaries:

“The political deepfake moment is here.” This week, a suspected AI-generated robocall imitating President Biden’s voice told Democratic voters to skip New Hampshire’s presidential primary. Public officials and cybersecurity experts are warning that true “election chaos” could be on the horizon. This robocall was identified as fake, but in time even experts may no longer be able to tell real media from AI-generated fakes. Meanwhile, companies like ElevenLabs, a voice-replication startup newly valued at $1.1 billion, are expected to push AI voice and video generation to unprecedented heights. (NBC, TIME, Bloomberg)

ANNOUNCEMENTS

Panel on Enterprise AI, Use Cases and Challenges with McKinsey & Harvard Business School

Oak Seed Ventures partner Chee-We is organizing a panel with McKinsey and the Harvard Business School Alumni Association of Silicon Valley on “Enterprise AI - use cases and challenges” on 2/12/2024 at McKinsey’s San Francisco office. If you’re in the area, feel free to sign up:

https://hbsanc.org/events/134398

Thanks for reading this week’s newsletter! If you know of an interesting new development, reach out and we may include your story in our next post!

Until next time,